How to fix common problems with Materialized Views in ClickHouse

Written by

Armend Avdijaj

Jun 18, 2025

Introduction

Materialized Views are ClickHouse’s Swiss Army knife for real-time data transformation. They promise efficient aggregations, replication, and denormalization. Yet, as data velocity and complexity grow, cracks emerge. Stale historical data, deduplication chaos, and Kafka join failures plague even seasoned engineers.

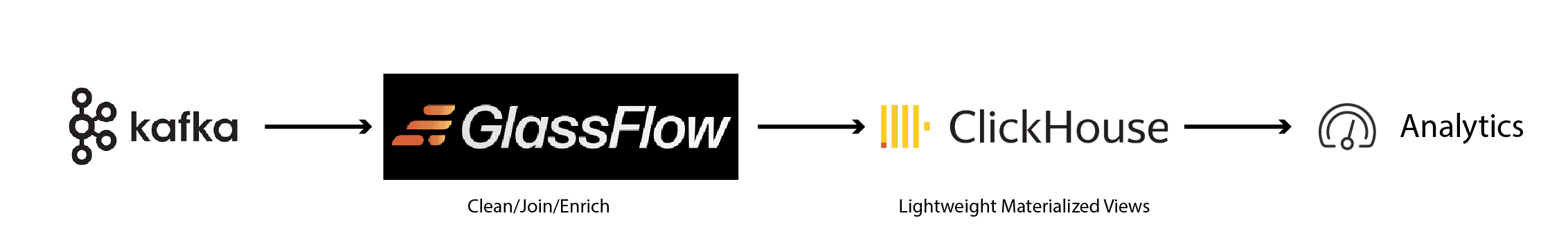

Enter GlassFlow—a Python-based ETL engine designed to augment ClickHouse by offloading streaming transformations before data hits Materialized Views. Let’s dissect how this synergy solves ClickHouse’s most stubborn Materialized View limitations.

The Critical Limitations of ClickHouse Materialized Views

ClickHouse Materialized Views excel at incremental processing but falter under advanced use cases. We will encounter several concerns.

The first problem we will encounter is historical data blind spots. Materialized Views only process new data blocks. Reprocessing historical data requires manual ALTER TABLE ... REFRESH. This is a resource-heavy operation that can stall analytics.

We will also encounter a dilemma with the deduplication process. ClickHouse offers the ReplacingMergeTree table engine for deduplication. However, this process occurs in the background at an unknown time, and we can't plan for this (source). The ReplacingMergeTree engines handle duplicates eventually, but transient duplicates force engineers to choose between slow FINAL queries or inaccurate results. See

We will find out stream joins are impossible. Materialized Views can't join two Kafka streams in real time. Workarounds like pre-joined topics or denormalized tables create pipeline debt.

And finally, ClickHouse can become the bottleneck. Constantly running deduplication logic within ClickHouse for every micro-batch can add significant load to the database, potentially impacting query performance and ingestion speed, especially if the source table for the Materialized View is also the primary ingestion point. On top of that, if you add complex transformations like JSON parsing and geospatial enrichment, this will consume CPU cycles needed for analytical queries, further degrading overall performance.

Note: If you want to learn more about Materialized Views, please read our previous article ClickHouse Materialized Views.

Streamlining Data Transformation for ClickHouse using GlassFlow

As the limitations of ClickHouse Materialized Views become evident, particularly in streaming contexts, the need for a more specialized and flexible approach to data preparation becomes clear. GlassFlow emerges as such a solution, an ETL (Extract, Transform, Load) tool meticulously engineered as a real-time stream processor tailored for data pipelines flowing from Kafka to ClickHouse. Its primary objective is to simplify the creation and management of these pipelines, acting as a crucial intermediary processing layer that transforms Kafka streams before they are ingested into ClickHouse.

Core Architecture and Principles

GlassFlow is built with data engineers in mind, addressing common pain points in streaming data integration. It is designed to handle late-arriving events, ensure exactly-once processing semantics for data correctness, and scale effectively with high-throughput data streams. A key aspect of its design is user-friendliness; it provides a web-based interface for configuring and monitoring data pipelines, abstracting away much of the underlying complexity.

Key Capabilities Relevant to Overcoming Materialized View Limitations

GlassFlow's feature set directly targets many of the challenges encountered with ClickHouse Materialized Views, especially when dealing with Kafka as a data source.

Real-time Streaming Deduplication. A common issue with streaming systems like Kafka (which often guarantees at least one delivery) is the potential for duplicate messages. GlassFlow provides real-time deduplication of Kafka streams before the data reaches ClickHouse. Users can configure deduplication keys (specific fields within the data) and a time window (e.g., up to 7 days) over which uniqueness is checked. This ensures that only the first occurrence of an event (based on the key within the window) is forwarded, preventing redundant data from being ingested and stored in ClickHouse.

Effortless Temporal Stream Joins. Analyzing streaming data often requires correlating information from multiple streams based on common keys and time proximity. GlassFlow facilitates temporal joins between two or more Kafka streams in real time. Through its UI, users can configure the streams to be joined, the join keys, and the time window for matching events across streams. The output is a new, enriched stream ready for ingestion into ClickHouse, containing data combined from the source streams.

Managed Kafka Connector and Optimized ClickHouse Sink. GlassFlow includes a built-in Kafka connector that handles automatic data extraction from specified Kafka topics, supporting multiple topics and partitions and natively parsing JSON data types. Some implementations leverage a NATS-Kafka Bridge for this connectivity. On the destination side, it features an optimized ClickHouse sink that uses a native connection for maximum performance. This sink allows for configurable batch sizes for efficient data ingestion and adjustable wait times for throughput optimization. It also includes built-in retry mechanisms to handle transient failures, ensuring data is not lost. It can also manage automatic schema detection for JSON data when writing to ClickHouse.

Stateful Processing. Critical for features like deduplication and temporal joins over time windows, GlassFlow incorporates a built-in lightweight state store. This enables low-latency, in-memory processing for these stateful operations, retaining context within the specified time window. This state management is also crucial for correctly handling late-arriving events, ensuring they can still be processed and joined appropriately.

Native Materialized View vs GlassFlow + ClickHouse

Capability | ClickHouse MV Alone | GlassFlow + ClickHouse |

|---|---|---|

Historical Data Reprocessing | Manual | Automatic backfills |

Duplicate Handling | Eventual consistency | Exactly-once in pipeline |

Multi-Stream Joins | Not supported | Supported |

Compute Load | On ClickHouse nodes | Offloaded to serverless |

Data Freshness | Seconds-minutes | Sub-second |

Illustrative Use Cases

To make the benefits of using GlassFlow with ClickHouse more concrete, consider these illustrative scenarios. These examples highlight situations where ClickHouse Materialized Views would face significant challenges, but GlassFlow can provide an effective solution, particularly where the data originates from Kafka streams and requires stateful processing or multi-stream correlation before analytical querying in ClickHouse.

Scenario 1: Real-Time User Activity Analysis with Multiple Event Streams

Challenge: An e-commerce platform aims to perform real-time analysis of user behavior by correlating various interaction events. These events, such as page_views, add_to_cart actions, and purchase_confirmations, are streamed into separate Kafka topics. For comprehensive analysis, these disparate event streams need to be joined by user_ID and session_ID within specific time windows to reconstruct user journeys. Additionally, due to at-least-once delivery semantics in Kafka or client-side retries, duplicate events are possible and must be eliminated. Implementing this with ClickHouse Materialized Views would be problematic due to their limitations with multi-stream joins (especially for incremental Materialized Views) and the complexities of performing efficient, stateful deduplication across different event types within the database itself.

GlassFlow Solution:

GlassFlow is configured to consume the

page_views,add_to_cart, andpurchase_confirmationsstreams from their respective Kafka topics.A GlassFlow pipeline is set up to perform temporal joins on these streams, using

user_IDandsession_IDas join keys, and a defined time window (e.g., 30 minutes for a session) to correlate related events.Another GlassFlow processing step (or integrated within the join pipeline) handles deduplication of the combined, enriched stream based on unique event identifiers (e.g.,

event_ID) to ensure each distinct user action is counted only once.The resulting stream of enriched, deduplicated user activity data is then efficiently loaded by GlassFlow's ClickHouse sink into a clean, well-structured table in ClickHouse, ready for complex behavioral analytics queries.

Benefit: This approach enables sophisticated user journey analysis in ClickHouse using data from multiple event streams, without being constrained by the join and deduplication limitations of ClickHouse MVs. ClickHouse receives data that is already prepared for immediate analytical use.

Scenario 2: IoT Data Ingestion and Aggregation from Distributed Sensors

Challenge: An industrial IoT application collects telemetry data from thousands of sensors deployed across various facilities. This data, including metrics like temperature, pressure, and vibration, arrives in many small, frequent batches via Kafka. Before this data can be aggregated in ClickHouse for trend analysis, anomaly detection, and predictive maintenance, it requires deduplication (as sensors might retransmit data due to network issues) and potentially some initial filtering or simple transformations (e.g., unit conversions, discarding invalid readings). Attempting to use ClickHouse Materialized Views for these pre-processing steps on such high-volume, high-frequency small batches could lead to severe insert performance degradation in the source table and excessive MV update overhead, potentially destabilizing the ClickHouse cluster.

GlassFlow Solution:

GlassFlow consumes the raw sensor data streams from the relevant Kafka topics.

It applies streaming deduplication rules, perhaps based on a composite key of

device_IDand a narrowtimestampproximity, to filter out redundant telemetry signals.GlassFlow can also perform basic filtering operations, such as removing readings that fall outside plausible ranges, or apply simple schema mapping if sensor data formats vary slightly.

GlassFlow then batches the cleaned and validated sensor data and uses its optimized sink to efficiently ingest it into ClickHouse tables designed for time-series analysis and aggregation.

Benefit: This ensures that ClickHouse ingests only clean, optimized, and deduplicated sensor data, preventing MV-related performance bottlenecks that would arise from processing raw, high-frequency small batches. ClickHouse can then focus its resources on performing fast aggregations, time-series functions, and complex analytical queries on the prepared data, improving the reliability and responsiveness of the IoT analytics platform.

These use cases underscore that GlassFlow's value is particularly pronounced in scenarios where the data source is inherently stream-based, like Kafka, and the data requires stateful processing (such as time-windowed deduplication) or correlation across multiple streams (like temporal joins) before it is subjected to analytical querying in ClickHouse. These are precisely the types of operations that are complex or inefficient to handle with ClickHouse Materialized Views but are core strengths of GlassFlow's design.

Note: If you want to learn about how to implement this, please read our previous article title How to Build a Streaming Deduplication Pipeline. The GlassFlow ETL Github Repo also have several demos you can play with.

Conclusion

While ClickHouse is a powerful analytics engine, its native Materialized Views struggle with the demands of modern streaming data from sources like Kafka, facing limitations with data freshness, complex transformations, and operational overhead. GlassFlow presents a strategic solution by acting as a dedicated stream processing ETL tool that sits between Kafka and ClickHouse. By offloading tasks like real-time deduplication and multi-stream temporal joins, it prepares and refines data before it reaches the database. This decoupled approach directly addresses the core weaknesses of Materialized Views and yields significant benefits, including improved data quality, enhanced transformation capabilities, greater development agility, and a reduced computational burden on the ClickHouse cluster.

This shift towards upstream data preparation represents a more mature, best-of-breed data architecture where specialized tools are leveraged for their core strengths. This separation of concerns is critical for the future of high-performance analytics, as it ensures that powerful engines like ClickHouse receive clean, analysis-ready data. By handling the complexities of raw data streams, tools like GlassFlow allow organizations to maximize the performance of their data infrastructure and unlock its full potential as data velocity and complexity continue to grow.

ClickHouse materialized views remain indispensable—but their limits are real. GlassFlow isn’t a replacement; it’s a force multiplier. By handling stateful operations like joins, deduplication, and enrichment before ClickHouse, Materialized Views regain their original promise: simple, fast aggregations.

Stop wrestling with ReplacingMergeTree and FINAL clauses. Deploy a GlassFlow pipeline today, and let ClickHouse do what it does best: blisteringly fast analytics.