Boost your knowledge with GlassFlow

Stay informed about new features, explore use cases, and learn how to build real-time data pipelines with GlassFlow.

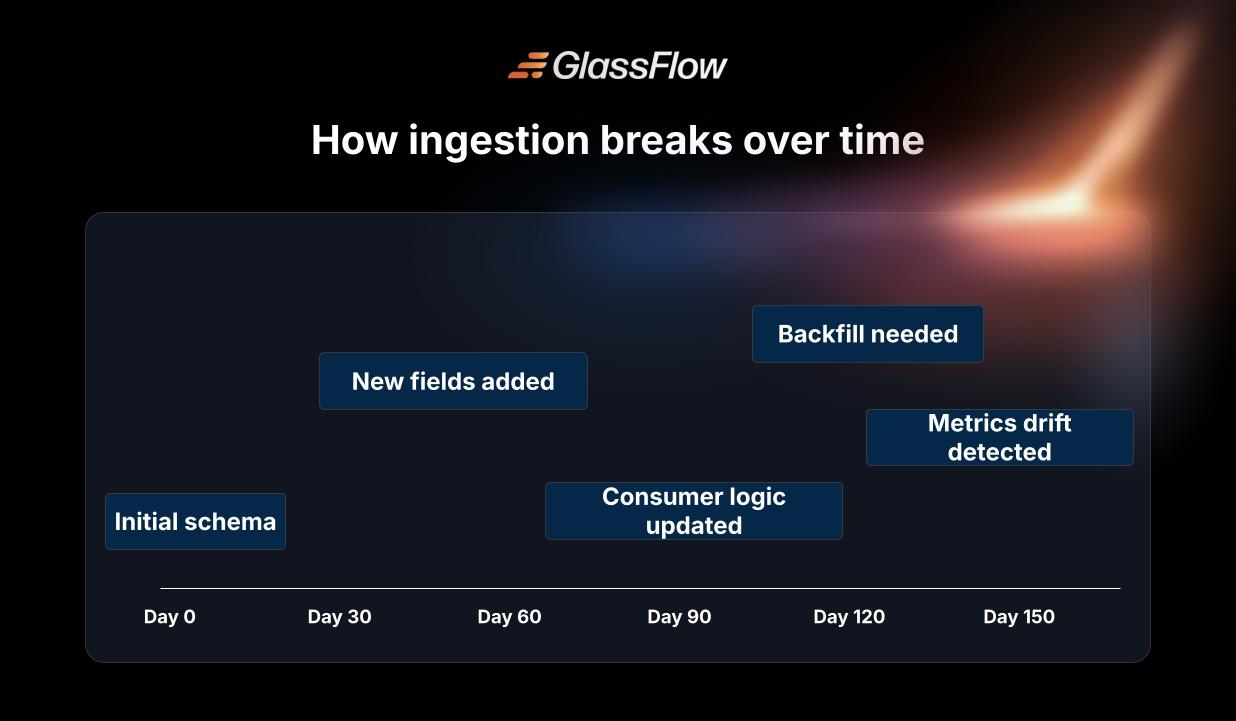

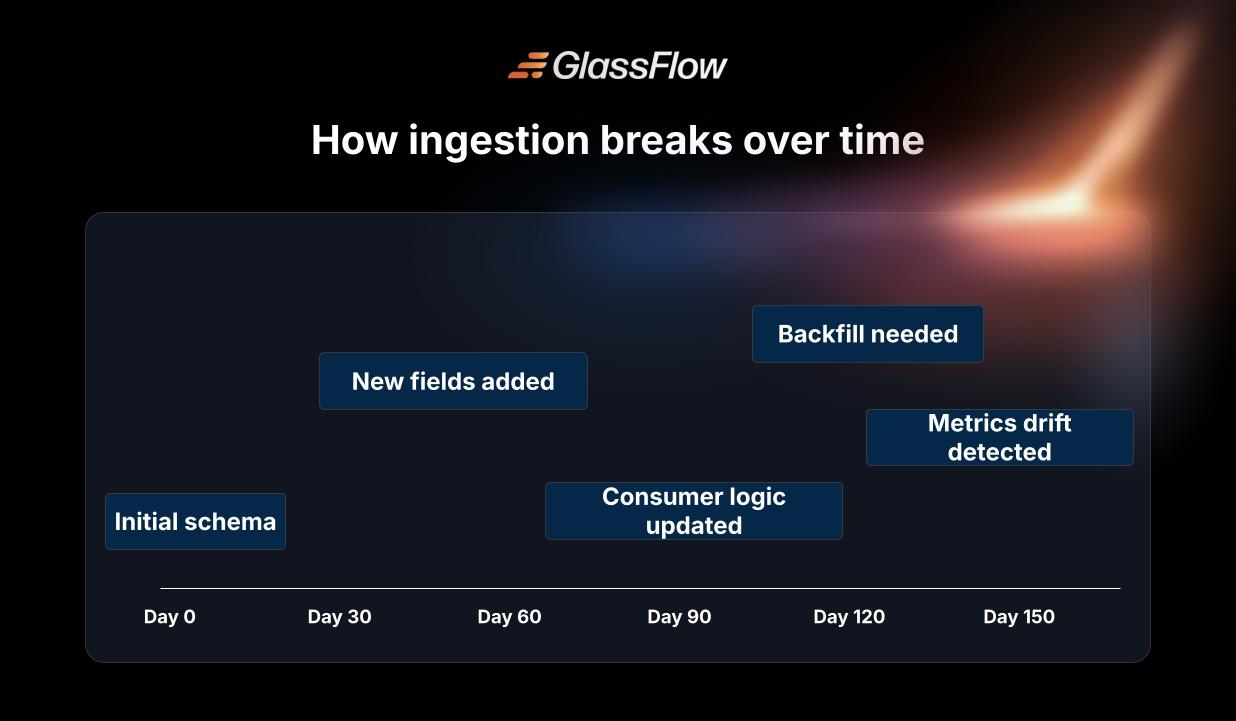

Kafka to ClickHouse breaks quietly in AI systems

Learn how AI systems push Kafka-to-ClickHouse pipelines beyond what most of them were built for.

Written by Armend Avdijaj

Jan 21, 2026

Cleaned Kafka Streams for ClickHouse

Clean Data. No maintenance. Less load for ClickHouse.